What do number conversions cost?

published at 16.03.2023 17:14 by Jens Weller


And so the devil said: "what if there is an easier design AND implementation?"

In the last two blog posts I've explored some of the ways to implement a type that holds a string_view and keeps a conversion of it to another type in a variant or any. My last blog post also touched on conversions, which made me wonder: what if the type had no cache for conversions at all? Its memory footprint would be much smaller, and the implementation could simply convert on demand in a toType function. This would essentially be a type that holds a string_view but offers ways to convert that view to a type. A cache to hold the converted value is then not necessary, as the conversion is done on demand.
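To make the idea concrete, here is a minimal sketch of such a cache-less type, assuming std::from_chars as the conversion and std::optional as the way to report failure. The class name NumberView is hypothetical; only the toType name comes from the text above. The object is just a string_view, and every call parses the text again:

```cpp
#include <charconv>
#include <optional>
#include <string_view>
#include <system_error>

// Hypothetical cache-less type: it stores only the view and converts
// on every call, so it costs no memory beyond the string_view itself.
class NumberView {
public:
    explicit NumberView(std::string_view sv) : view_(sv) {}

    std::string_view view() const { return view_; }

    // Converts the whole view to T (int, float, ...) on demand.
    // Returns std::nullopt if parsing fails or characters are left over.
    template <class T>
    std::optional<T> toType() const {
        T value{};
        const char* last = view_.data() + view_.size();
        auto [ptr, ec] = std::from_chars(view_.data(), last, value);
        if (ec != std::errc() || ptr != last)
            return std::nullopt;
        return value;
    }

private:
    std::string_view view_;
};
```

Usage would look like `NumberView{"3690"}.toType<int>()`, which yields an optional holding 3690. The trade-off is that each query pays the full conversion cost, which is what the benchmarks in this post measure.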

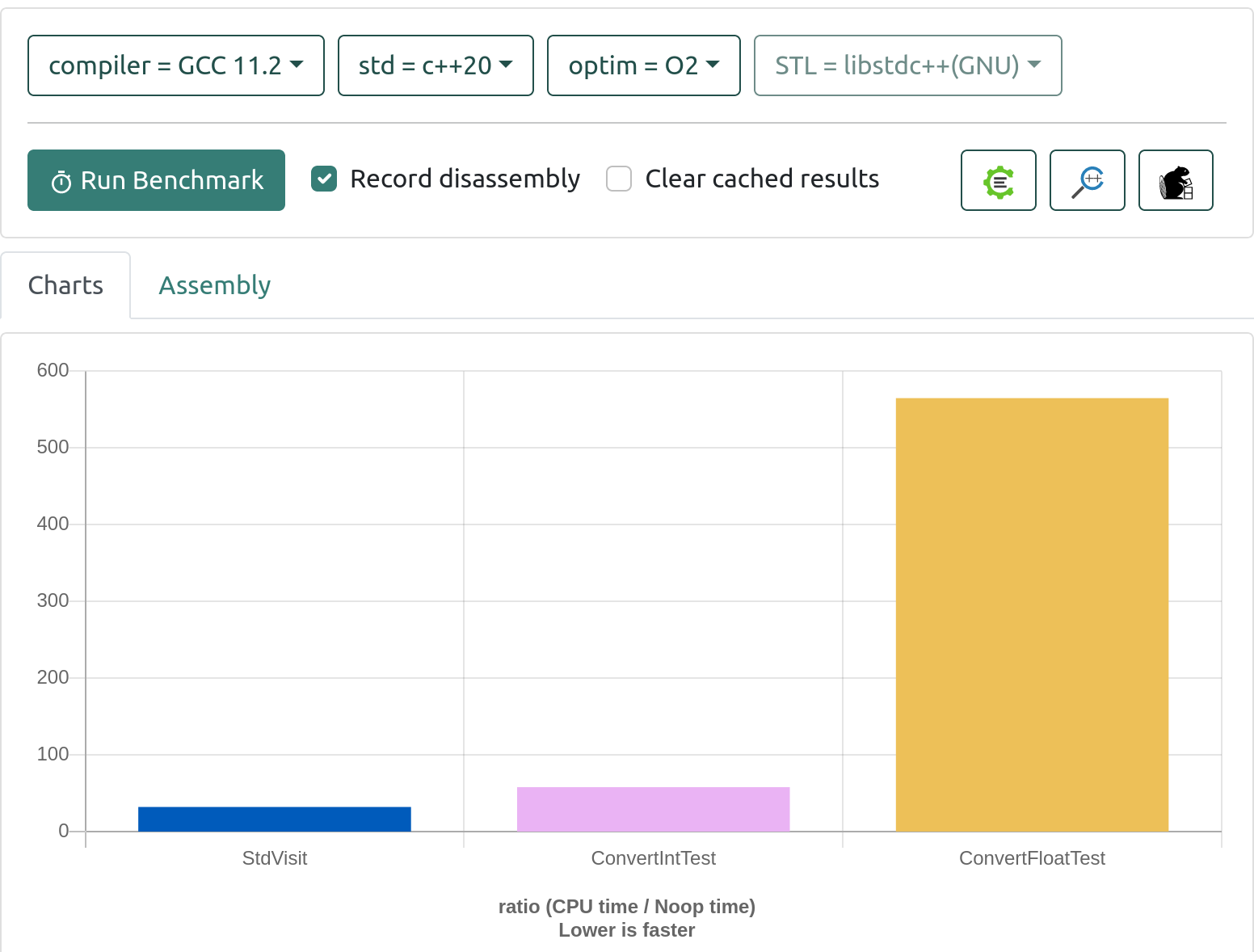

Which made me wonder: how expensive are conversions, say to int or float? So this time the code is a bit different. The first block still runs on std::visit and does no conversions. The other two blocks do something different: they only hold std::string_view instances, which then get converted to int or float. So it's hard to compare them directly, but it shows that accessing the cache is much faster than doing the conversion.
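To show what "access to the cache" means here, a minimal sketch, assuming a simplified stand-in for the type from the earlier posts. The names CachedNumber and cachedValue are hypothetical and not the actual code from those posts. Reading the stored value is only a dispatch on the active variant alternative, with no parsing involved:

```cpp
#include <string_view>
#include <type_traits>
#include <variant>

// Hypothetical stand-in: the view plus an already converted value
// stored in a std::variant, which acts as the cache.
struct CachedNumber {
    std::string_view view;
    std::variant<std::monostate, int, float> value;
};

// Reading the cache: std::visit dispatches on the active alternative,
// no text is parsed here.
double cachedValue(const CachedNumber& n) {
    return std::visit([](const auto& v) -> double {
        using V = std::decay_t<decltype(v)>;
        if constexpr (std::is_same_v<V, std::monostate>)
            return 0.0;  // nothing has been converted yet
        else
            return static_cast<double>(v);
    }, n.value);
}
```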

And the result on gcc 11.2:

```cpp
#include <benchmark/benchmark.h>
#include <charconv>
#include <string>
#include <string_view>
#include <vector>

static void ConvertIntTest(benchmark::State& state) {
    // Code before the loop is not measured
    std::string str("long,3690,2650,58801,views");
    std::vector<std::string_view> v;
    v.push_back(std::string_view(&str[5], 4));   // "3690"
    v.push_back(std::string_view(&str[10], 4));  // "2650"
    v.push_back(std::string_view(&str[5], 4));   // "3690" again
    v.push_back(std::string_view(&str[15], 5));  // "58801"
    size_t x = 0, i = 0;
    auto size = v.size();
    for (auto _ : state) {
        auto& lv = v[i % size];
        int val = 0;  // initialized, so a failed parse never reads garbage
        auto [ptr, ec] = std::from_chars(lv.data(), lv.data() + lv.size(), val);
        //if(ec == std::errc())
        // atoi variant: atoi expects a null-terminated string, so this only
        // works because each number in str is followed by a comma
        //val = atoi(lv.data());
        x += val;
        i++;
        benchmark::DoNotOptimize(i);
        benchmark::DoNotOptimize(x);
        benchmark::DoNotOptimize(val);
    }
}
BENCHMARK(ConvertIntTest);

static void ConvertFloatTest(benchmark::State& state) {
    // Code before the loop is not measured
    std::string str("long,36.9,26.5,5.881,views");
    std::vector<std::string_view> v;
    v.push_back(std::string_view(&str[5], 4));   // "36.9"
    v.push_back(std::string_view(&str[10], 4));  // "26.5"
    v.push_back(std::string_view(&str[5], 4));   // "36.9" again
    v.push_back(std::string_view(&str[15], 5));  // "5.881"
    size_t x = 0, i = 0;
    auto size = v.size();
    for (auto _ : state) {
        auto& lv = v[i % size];
        float val = 0.0f;
        auto [ptr, ec] = std::from_chars(lv.data(), lv.data() + lv.size(), val);
        //val = std::atof(lv.data());
        //if(ec == std::errc())
        x += val;
        i++;
        benchmark::DoNotOptimize(i);
        benchmark::DoNotOptimize(x);
        benchmark::DoNotOptimize(val);
    }
}
BENCHMARK(ConvertFloatTest);
// Outside Quick Bench, add BENCHMARK_MAIN(); or link against benchmark_main.
```

Looking at this, doing conversions on demand without caching is the right strategy when they are rare. Saving the memory and the time to store the value can be worth it if an instance of the type is mostly queried for a converted value only once. Floating-point conversion is so costly, though, that having a cache might be the best design. And if it's a common access pattern for the type to be queried, a cache is by far the better strategy. Note that I didn't do any error checking; in practice you'd need to do that.
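For the "queried often" case, a lazily filled cache is one possible middle ground. This is a minimal sketch, assuming std::optional as the cache and std::from_chars with the error checking the benchmarks leave out; the class LazyNumber and its conversions() counter are hypothetical and exist only for illustration. The first query pays for the conversion, later queries return the stored result:

```cpp
#include <charconv>
#include <optional>
#include <string_view>
#include <system_error>

// Hypothetical lazily caching type: parses on the first query only.
template <class T>
class LazyNumber {
public:
    explicit LazyNumber(std::string_view sv) : view_(sv) {}

    // Returns std::nullopt if the view does not hold a valid T.
    std::optional<T> get() const {
        if (!parsed_) {
            T value{};
            const char* last = view_.data() + view_.size();
            auto [ptr, ec] = std::from_chars(view_.data(), last, value);
            if (ec == std::errc() && ptr == last)  // the error check
                cache_ = value;
            parsed_ = true;  // failures are remembered too, so bad input is parsed once
            ++conversions_;
        }
        return cache_;
    }

    // Only here to make the caching observable.
    int conversions() const { return conversions_; }

private:
    std::string_view view_;
    mutable std::optional<T> cache_;
    mutable bool parsed_ = false;
    mutable int conversions_ = 0;
};
```

Compared to a plain string_view, this adds an optional, a flag and a counter to every instance, and the mutable members make concurrent get() calls on one object a data race. That is the memory and complexity price the on-demand design avoids.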

That said, this test doesn't measure exactly that: currently it compares the conversions against access to the cache. But it gives interesting results.

There is also a difference between from_chars and atoi/atof. For floats, the first is a lot slower than the second: 600 "cpu_time"* vs. 329 when running without the int conversion test. But again, with gcc 12.2 instead of 11.2 this gets faster: from_chars now takes 74.9 cpu_time. std::from_chars also offers more control, and its error handling is slightly better. For int it's the other way around: 58.8 for from_chars vs. 77.5 for atoi. So if speed is really important to you, you'll have to measure which method is faster and better for your code on your platform.
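The difference in error handling can be shown in a few lines. This is a minimal sketch; the helper names parseShort and viaAtoi are hypothetical, and std::int16_t is chosen only so that 58801 from the benchmark string overflows. std::from_chars reports invalid input and overflow through its error code and works on any character range, while std::atoi needs a null-terminated string and has no error channel (invalid input gives 0, out-of-range input is undefined behavior):

```cpp
#include <charconv>
#include <cstdint>
#include <cstdlib>
#include <string>
#include <string_view>
#include <system_error>

// from_chars: the result tells us about bad input and overflow.
std::errc parseShort(std::string_view sv, std::int16_t& out) {
    const char* last = sv.data() + sv.size();
    auto [ptr, ec] = std::from_chars(sv.data(), last, out);
    if (ec == std::errc() && ptr != last)
        return std::errc::invalid_argument;  // trailing characters
    return ec;
}

// atoi: needs a null-terminated string, so a view into a larger buffer
// has to be copied first, and failures cannot be distinguished from 0.
int viaAtoi(std::string_view sv) {
    std::string tmp(sv);
    return std::atoi(tmp.c_str());
}
```

The copy in viaAtoi matters: calling std::atoi directly on the data() of the view "36" taken from "3690" keeps reading past the end of the view and returns 3690.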

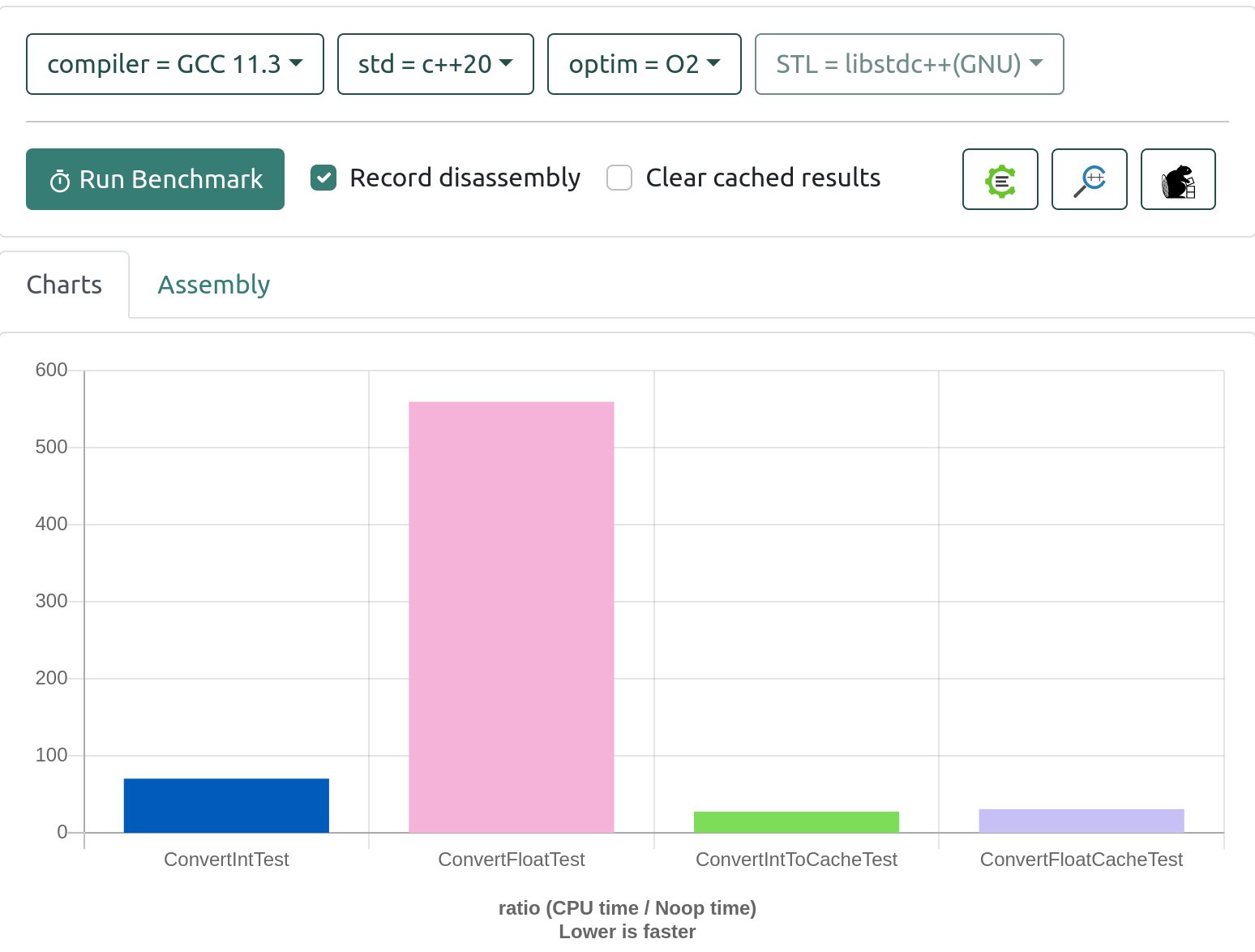

Now, let's try to measure caching vs. on demand. For this, a std::vector of int/float is created that stores whatever has been converted in the first run:

...

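```cpp
std::vector<float> fv;
fv.reserve(4);
bool first = true;
size_t x = 0, i = 0;
auto size = v.size();
for (auto _ : state) {
    if (first) {
        // First pass: convert each view once and store the result
        auto& lv = v[i % size];
        float val = 0.0f;
        auto [ptr, ec] = std::from_chars(lv.data(), lv.data() + lv.size(), val);
        //val = std::atof(lv.data());
        //if(ec == std::errc())
        x += val;
        fv.push_back(val);
        // Stop converting once every view has been converted exactly once
        if (fv.size() == size)
            first = false;
        benchmark::DoNotOptimize(val);
    }
    else
        x += fv[i % size];  // later passes only read the cached value
    i++;
    benchmark::DoNotOptimize(i);
    benchmark::DoNotOptimize(x);
}
```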

Link to this code on godbolt. Links to quick-bench seem to time out quickly, so I'll try to use godbolt's link-in-the-URL feature to preserve the tests.

Here the std::visit code no longer makes much sense, so this graph shows the conversion code with and without caching on gcc 11:

As you can see, the cost of the first conversion is present, but it averages out over the test. So if a value is accessed frequently, the cache is of course better. While I'm just playing around with code here, you may want to think about where your code does conversions, and whether adding a cache could speed things up for you.
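Why the first conversion averages out can be written down directly. This is a simple cost model, not something the benchmark measures: assume $N$ queries in total, $k$ conversions in the first pass, a per-conversion cost $t_{\text{conv}}$ and a per-read cache cost $t_{\text{cache}}$. The average cost per query then approaches the cache cost:

$$
\bar{t}(N) = \frac{k\,t_{\text{conv}} + (N - k)\,t_{\text{cache}}}{N} \;\longrightarrow\; t_{\text{cache}} \quad (N \to \infty)
$$

Per value queried $q$ times, with an additional cost $s$ for storing it, the cache pays off when

$$
t_{\text{conv}} + s + (q - 1)\,t_{\text{cache}} < q\,t_{\text{conv}}
\iff (q - 1)\,(t_{\text{conv}} - t_{\text{cache}}) > s
$$

For $q = 1$ the left side is zero, so a value that is only queried once never benefits from the cache, which matches the on-demand argument above. The model ignores the extra memory per instance.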

*: Quick Bench does not state the time unit of its measurements, only "cpu_time". The value it reports is based on the time an empty test takes to run compared to yours. That way it scales with a stronger CPU and makes results more comparable.


This and other posts on Meeting C++ are enabled by my supporters on Patreon!